AI has become unavoidable in capital raising.

Over the last year, capital raisers have faced growing pressure to move faster, respond to investors more quickly, reduce legal costs, and streamline increasingly complex workflows.

AI promises all of that. And in many cases, it delivers. But in capital raising, speed without guardrails can quietly turn into risk.

At Avestor, we’ve had a front-row seat to how AI is actually being used across hundreds of funds and private offerings. The question we see is not whether capital raisers should use AI, but how they can use it without creating legal, regulatory, or reputational exposure.

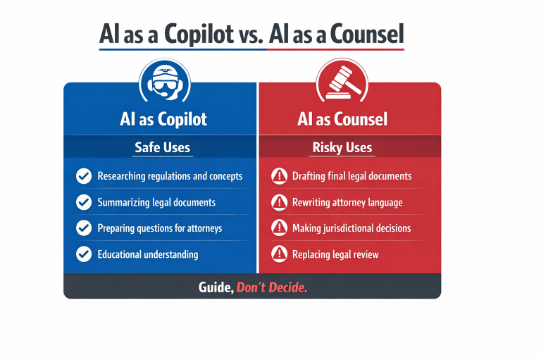

As Sanjay Vora, Founder and CEO of Avestor, discussed during our recent leadership webinar: “AI is a copilot, not counsel.”

That distinction is the difference between leverage and liability.

The Opportunity to Use AI Is Real

AI adoption in capital raising isn’t happening in a vacuum.

Legal costs continue to rise. Regulatory complexity keeps increasing. Investors expect faster responses, clearer documentation, and more transparency than ever before. At the same time, AI tools have become incredibly accessible, often producing confident, polished outputs in seconds.

Avoiding AI entirely is no longer realistic. In fact, capital raisers who don’t use AI at all are already at a disadvantage.

The risk isn’t using AI.

The risk is trusting it in the wrong places.

Why AI Feels Smarter Than It Actually Is

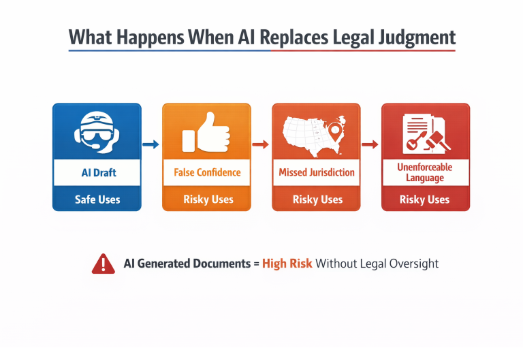

AI’s biggest strength can also be its biggest weakness: it projects confidence.

AI tools respond with clarity, friendliness, and certainty. That tone makes it easy to assume the answer is correct, especially when it sounds logical and well-written. But in legal and regulatory environments, confidence is not accuracy.

AI systems are trained primarily on publicly available data. In areas like securities law, court rulings, and state-level regulations, much of the most important information lives behind paywalls, in court dockets, or in recent rulings that AI tools may not have access to.

This creates a dangerous gap between how sure AI sounds and how current or applicable its guidance actually is.

As Sanjay explained:

“AI gives people a false sense of confidence. Judges don’t care how a document was generated. They only care about what’s written.”

— Sanjay Vora

In capital raising, that difference matters.

The Three Biggest Legal Risks Fund Managers Don’t See Coming

1. Jurisdiction Blindness

AI does not truly understand jurisdictional nuances.

Securities laws vary dramatically between states. What may be acceptable in Texas can be problematic in Oregon, California, or New York. AI often provides generalized guidance without recognizing which jurisdiction applies, or how recent rulings may have changed enforcement standards.

That gap is where real exposure begins.

2. Subtle Language Changes with Material Impact

AI often rewrites legal language to sound clearer or more conversational. While that may read better, it can materially weaken enforceability.

We’ve seen AI-generated edits remove protective clauses, soften obligations, or unintentionally change the legal meaning of a paragraph, all while making the document appear “improved.”

In legal documents, sounding better is not the same as holding up in court.

3. No Liability, No Safe Harbor

AI has no professional liability coverage. There is no regulatory safe harbor for AI-generated documents.

If something goes wrong, accountability doesn’t shift to the tool. It stays with the issuer, promoter, or fund manager, regardless of how the document was created.

Real-World Failures We’ve Already Seen

These risks aren’t theoretical. We’ve seen them play out repeatedly.

AI Overriding Attorney Judgment

In one case, a capital raiser used AI to review attorney-drafted documents and attempted to force the attorney to replace carefully constructed language with AI-generated edits. The attorney refused, correctly, because accepting those changes would have increased both client risk and professional liability. The result was unnecessary tension and delay, driven entirely by misplaced trust in AI.

AI-Generated Operating Agreements

We’ve also seen operating agreements drafted entirely by AI. They looked polished and complete, but missed jurisdictional requirements, included unenforceable provisions, and failed to meet basic legal standards. These documents were flagged before being used, preventing serious downstream exposure.

Confidential Data Leakage

In another case, business partners shared a single AI account to save costs. When a dispute arose, one partner could see the other’s confidential prompts and strategy through the shared AI history, effectively handing over sensitive information during a conflict.

Each of these situations started with good intentions and ended with unnecessary risk.

The Right Way to Use AI in Capital Raising

AI can be incredibly powerful when used responsibly.

The most effective capital raisers treat AI as an educational and preparatory tool, not a decision-maker.

Best practices we consistently see work well:

- Use AI to understand legal language, not finalize it

- Summarize documents before discussing them with counsel

- Upload only specific sections, not full confidential agreements

- Establish mandatory legal review for anything investors will sign

- Train internal teams on where AI use stops

When used this way, AI increases efficiency without increasing exposure.

Why This Matters More as You Scale

The risks of AI misuse compound as you raise capital repeatedly.

Each new fund, SPV, or offering increases scrutiny. Small shortcuts that go unnoticed early can become serious issues as investor counts grow, audits occur, or disputes arise.

This is where structure, systems, and guardrails matter more than tools alone.

As Badri Malynur, Co-Founder of Avestor, noted:

“Most tech platforms won’t help with the business and strategic support we provide, even when it takes time.”

— Badri Malynur

That difference becomes more important, not less, as you scale.

AI Is Inevitable. Discipline Is Optional.

AI will continue to reshape capital raising. Capital raisers who use it thoughtfully will gain real advantages in speed, clarity, and execution.

But the winners won’t be the ones who move fastest at all costs.

They’ll be the ones who move faster with discipline.

AI belongs in the copilot’s seat, helping you navigate, summarize, and prepare.

It doesn’t belong in the pilot’s seat.

If you treat it that way, AI becomes leverage, not liability.

Thinking Through How AI Fits Into Your Capital Raising Process?

If you’re raising capital repeatedly, the real advantage isn’t just using AI; it’s having the right guardrails around it.

If it’s helpful, our team is always open to a conversation about how other capital raisers are using AI responsibly, where issues tend to surface, and how to structure your process to scale without creating unnecessary risk.

You’re welcome to book a conversation with Avestor to explore what that could look like for your next raise.